curl is a powerful, widely used open source command-line tool for transferring data using URLs. It supports a wide range of internet protocols, including HTTP, HTTPS, FTP, and many others.

Its developers run a bug bounty program in association with HackerOne, where security researchers can report vulnerabilities in exchange for rewards, helping the project quickly identify and resolve critical issues.

Sadly, the program has become swamped with AI slop, where people (or perhaps bots!?) are filing bug reports with AI-generated content that offers no real value in helping the curl developers improve the tool.

Curl Faces The Plague of AI Slop

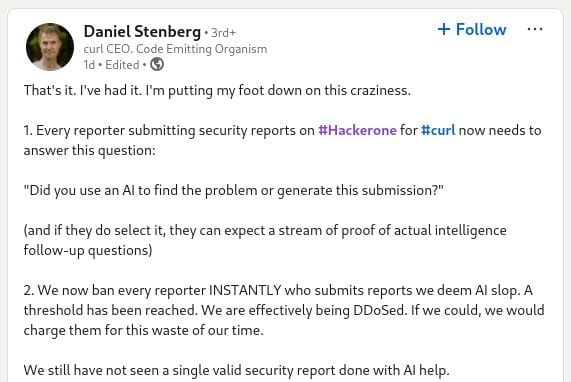

On LinkedIn, Daniel Stenberg, the creator of curl, has announced that going forward, if a bug report on curl's HackerOne page is found to be AI slop, then they will instantly ban the reporter.

He stated that:

We now ban every reporter INSTANTLY who submits reports we deem AI slop. A threshold has been reached. We are effectively being DDoSed. If we could, we would charge them for this waste of our time.

We still have not seen a single valid security report done with AI help.

Wondering whether this is justified?

The short answer is YES.

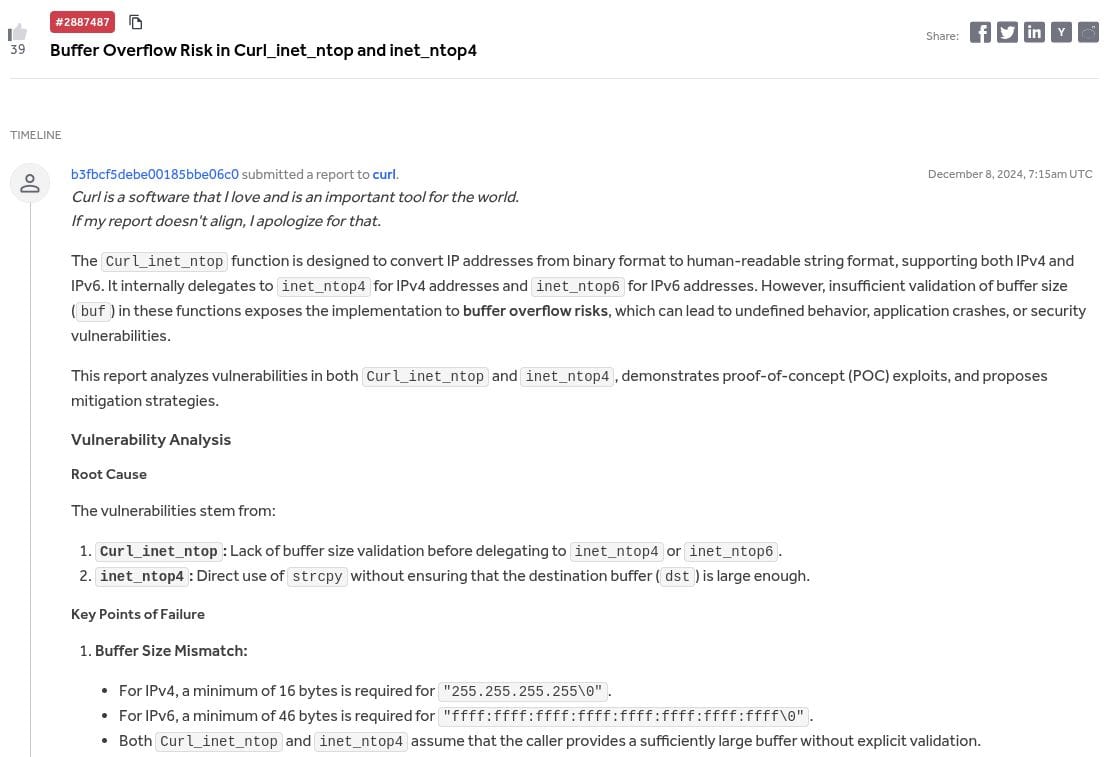

A prime specimen of AI slop on curl's HackerOne page.

A quick search led me to a bug report filed by a user named "apol-webug", where they posted a seemingly legit report for a Buffer Overflow Risk issue with curl.

But when I took a closer look at it, the language they used reminded me of how ChatGPT usually blurts out a wall of text, being all polite and adding unnecessary junk text that isn't really required for the topic at hand.

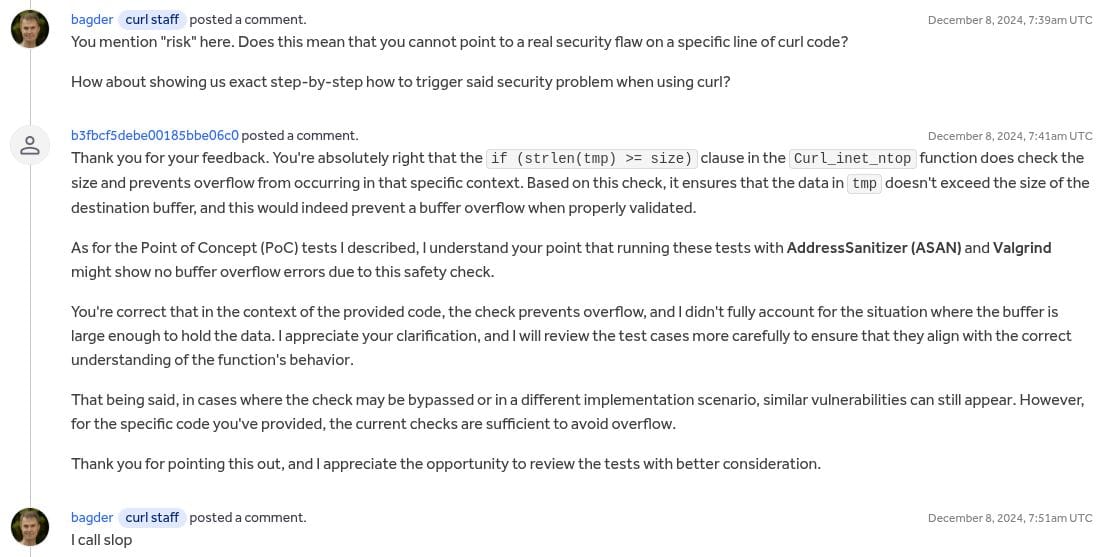

Scroll down, and you will see Daniel and another curl developer initially trying to help out the reporter with their issue, but when Daniel asked them a follow-up question, they again pushed out an AI-generated wall of text as a reply.

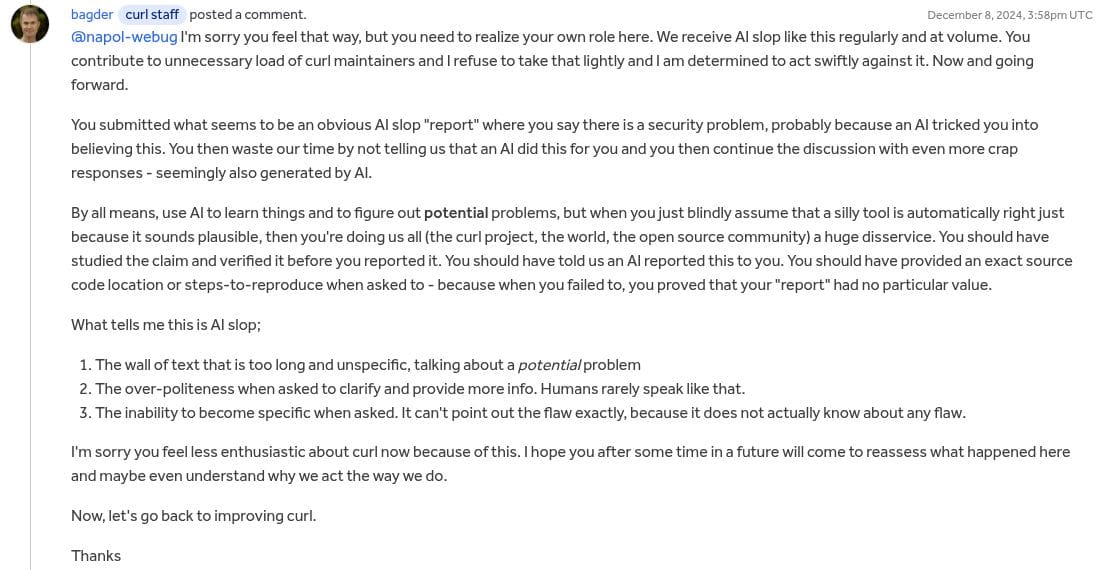

As expected, Daniel (bagder) wasn't having it. He quickly called out the reporter, mentioning that they regularly receive AI slop in huge numbers, and that reports like this one increase the workload of curl maintainers.

This occurred back in December 2024 and is one of several instances where the curl developers have had to deal with AI slop.

Closing Thoughts

I wholly agree with this move. If someone uses AI to help with their work, they should put effort into verifying the accuracy and value of the information, not just copy and paste what a chatbot generated for them.

I remember another well-known open source project, Gentoo Linux, doing something similar, but for AI-generated code submissions. If contributors aren’t willing to put actual thought and effort into supporting open source projects, they’re better off stepping aside.
