The field of AI is as busy as ever, with some new development or other grabbing the headlines. I expect this trend to continue into next year, as many organizations are betting heavily on AI.

DigitalOcean, a popular cloud service provider, has announced something interesting for AI professionals.

HUGS on DO: Easy One-Click AI Model Deployments

Dubbed “HUGS on DO”, it is a new one-click AI model deployment service, built in collaboration with Hugging Face, that makes it easy to deploy popular AI models on cutting-edge hardware.

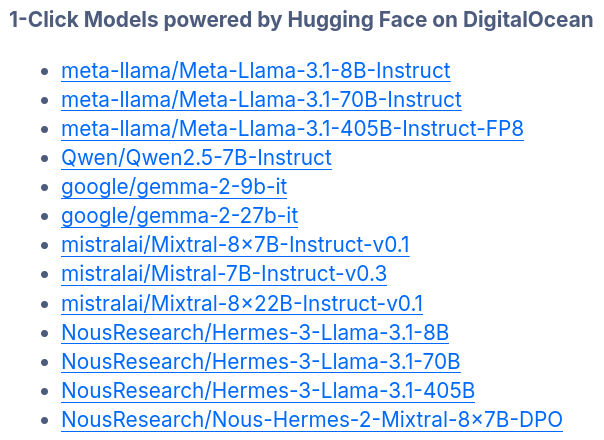

At the time of writing, supported models include Meta-Llama-3.1-8B-Instruct, Qwen2.5-7B-Instruct, gemma-2-9b-it, Mistral-7B-Instruct-v0.3, Hermes-3-Llama-3.1-405B, and more.

Hugging Face is responsible for maintaining and updating these models, so DigitalOcean customers always have access to the latest versions.

All of these models are deployed on GPU Droplets, DigitalOcean's NVIDIA H100-powered virtual servers designed for AI/ML, deep learning, and high-performance computing (HPC) workloads.

Currently, these servers are available in only two regions: New York (NYC2) and Toronto (TOR1).

Want To Try It Out?

You can get started with one-click model deployments by signing up for a DigitalOcean account (partner link). To learn more, the official announcement blog post is also worth a read.

I believe this is a great starting point for enthusiasts who want to dip their toes into the world of AI without spending time setting things up.

What do you think? Let me know your thoughts in the comments!
