IBM has been pushing hard into enterprise AI with a focus on transparent, open source development. The company positions itself as an alternative to closed models, emphasizing responsible AI practices and business deployment.

Earlier this month, IBM launched Granite 4.0 with a hybrid architecture that combines Mamba-2 state-space layers with transformer layers. The approach proved effective, with the new models outperforming IBM's previous 8B versions.

Now IBM has released Granite 4.0 Nano, its smallest family of AI models yet.

Granite 4.0 Nano: A Compact Powerhouse?

This release was expected. When IBM showed off the Micro, Tiny, and Small variants of Granite 4.0 earlier this month, it mentioned plans to release Nano models by year-end. The October 28 announcement delivers on that promise ahead of schedule.

All Granite 4.0 Nano models are released under the Apache 2.0 license, making them freely available for commercial use. They target edge devices and low-latency applications where cloud-based models aren't practical.

The training methodology mirrors that of the parent Granite 4.0 models: IBM used the same training pipelines and over 15 trillion tokens of training data. The models also run on popular runtimes such as vLLM, llama.cpp, and MLX.

Like all Granite language models, the Nano variants carry ISO/IEC 42001 certification for responsible model development. This gives enterprises confidence that the models follow global standards for AI governance.

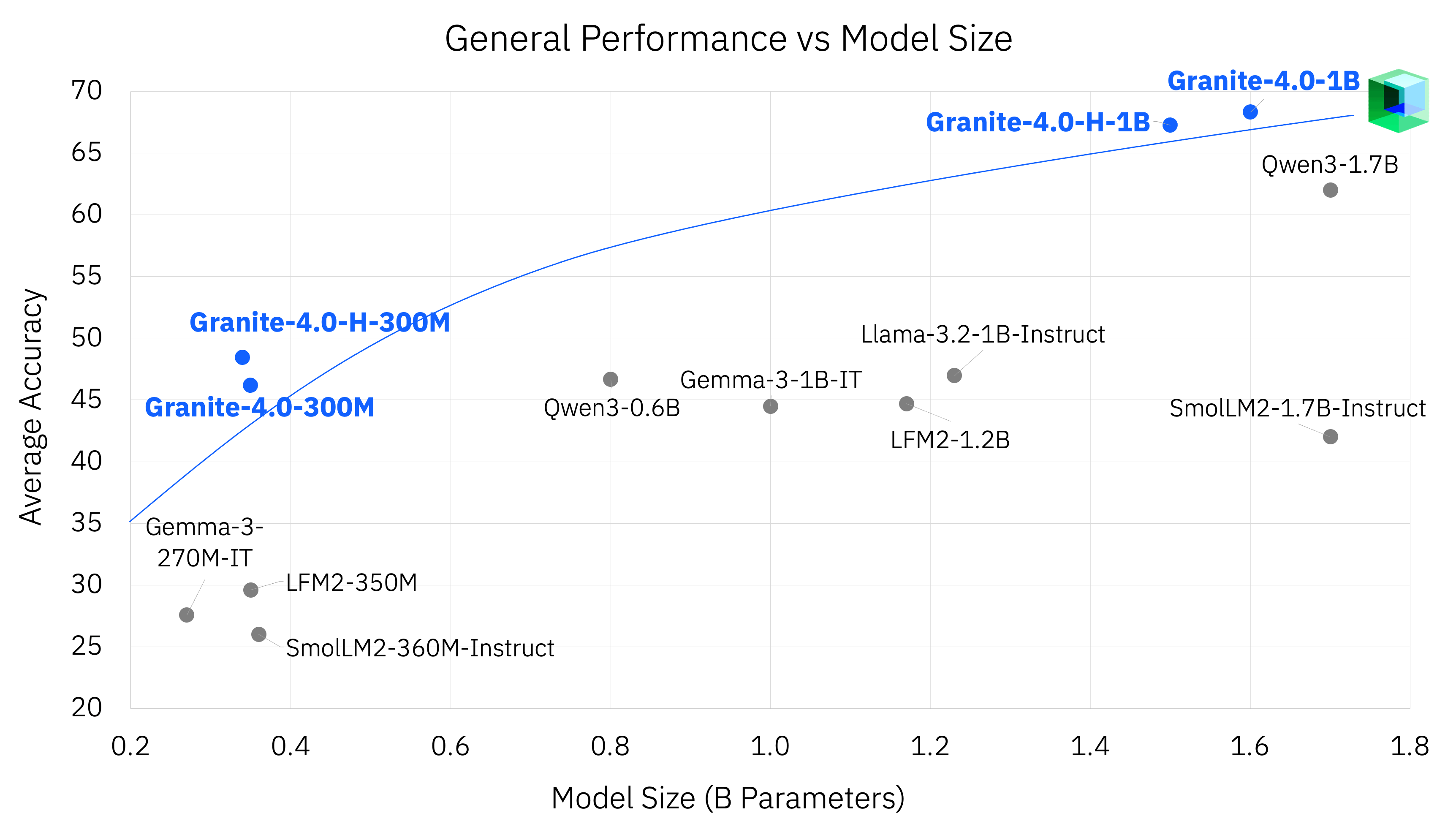

IBM tested Granite 4.0 Nano against competitors like Alibaba's Qwen, LiquidAI's LFM, and Google's Gemma across general knowledge, math, code, and safety benchmarks. The results show Granite Nano outperforming several similarly sized models when averaged across these domains.

The models are particularly strong in areas that matter for agentic workflows. On instruction following (IFEval) and tool calling (Berkeley's Function Calling Leaderboard v3, or BFCLv3), Granite 4.0 Nano outperformed many comparably sized models.

Want To Check It Out?

Granite 4.0 Nano includes four distinct models, each available in both base and instruct variants:

- Granite 4.0 H 1B: Roughly 1.5 billion parameters using hybrid-SSM architecture.

- Granite 4.0 H 350M: Around 350 million parameters with hybrid-SSM architecture.

- Granite 4.0 1B and Granite 4.0 350M: Traditional transformer alternatives for workloads where hybrid architectures lack optimized support.

You can find all eight models in the Granite 4.0 Nano Hugging Face collection.
