Recently, the AI field has made significant strides in adopting an open-source approach to development. The proprietary crowd probably wishes it had that kind of close-knit community of developers working towards great things.

In this space, multimodal models have become a major point of interest because they can process many types of input data, such as text, images, and videos, resulting in richer, more context-aware outputs.

There is always someone or some organization trying to offer a model that is more powerful and tractable than the ones that came before. In this instance, we have a Seattle-based non-profit AI research institute called Ai2 introducing a new family of open-source multimodal models called Molmo.

Let's check it out. 😃

Molmo: Better Than Proprietary Models?

Introduced as a family of state-of-the-art (SOTA) multimodal AI models, Molmo can be used to build things like chatbots, interactive educational platforms, content moderation systems, or even AI agents.

As demonstrated by the video above, you could ask a Molmo-powered agent to tell you a joke, help you navigate a new area, convert ideas from a whiteboard into a digital document, and carry out many such everyday tasks.

Molmo has been trained on a dataset called PixMo, which consists of almost 1 million carefully chosen image-text pairs split into two main categories. One is the dense captioning data used in multimodal pre-training, and the other is the supervised fine-tuning data used to enable a wide range of user interactions.

Molmo consists of four different models with varying capabilities:

- MolmoE-1B

- Molmo-7B-O

- Molmo-7B-D

- Molmo-72B

In this lineup, the 72B is the flagship model, the 7B-O is the most open one, the 7B-D is the demo model, and the E-1B is the most efficient one. The number before the "B" is the model's parameter count in billions.
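To get a feel for what those parameter counts mean on your own hardware, here is a rough back-of-the-envelope sketch. It assumes the number in each model name is the nominal parameter count (for a mixture-of-experts style model like MolmoE-1B, the real total may differ), and it only estimates the memory needed to hold the weights, not activations or any runtime overhead. The helper name `weight_memory_gb` and the dtype table are made up for this illustration.

```python
# Nominal parameter counts in billions, read off the model names.
# Assumption: the name reflects the size that matters for memory.
NOMINAL_PARAMS_BILLIONS = {
    "MolmoE-1B": 1,
    "Molmo-7B-O": 7,
    "Molmo-7B-D": 7,
    "Molmo-72B": 72,
}

# Bytes used to store a single parameter at common precisions.
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "int8": 1}


def weight_memory_gb(params_billions: float, dtype: str = "bfloat16") -> float:
    """Approximate memory for the weights alone, in GB (10^9 bytes)."""
    total_bytes = params_billions * 1e9 * BYTES_PER_PARAM[dtype]
    return total_bytes / 1e9


if __name__ == "__main__":
    for name, size in NOMINAL_PARAMS_BILLIONS.items():
        print(f"{name}: ~{weight_memory_gb(size):.0f} GB of weights in bfloat16")
```

Treat the numbers as a lower bound: actual usage depends on the runtime, the image inputs, and how long the generated output is.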

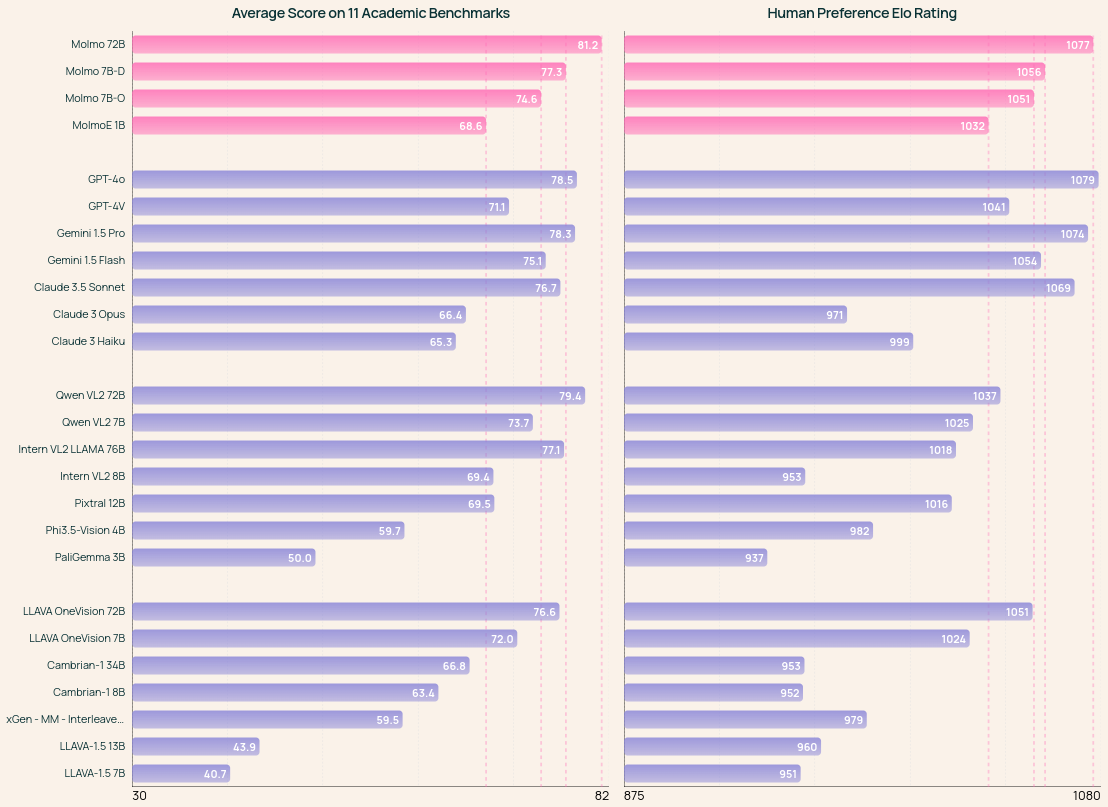

To demonstrate the performance of Molmo, the developers showcased two benchmarks where the four Molmo models were pitted against other, more popular/proprietary models.

On both benchmarks, the Molmo family of models either outperformed or came close to the likes of GPT-4o, Gemini 1.5 Pro, Claude 3.5 Sonnet, Qwen2-VL 72B, and Pixtral 12B.

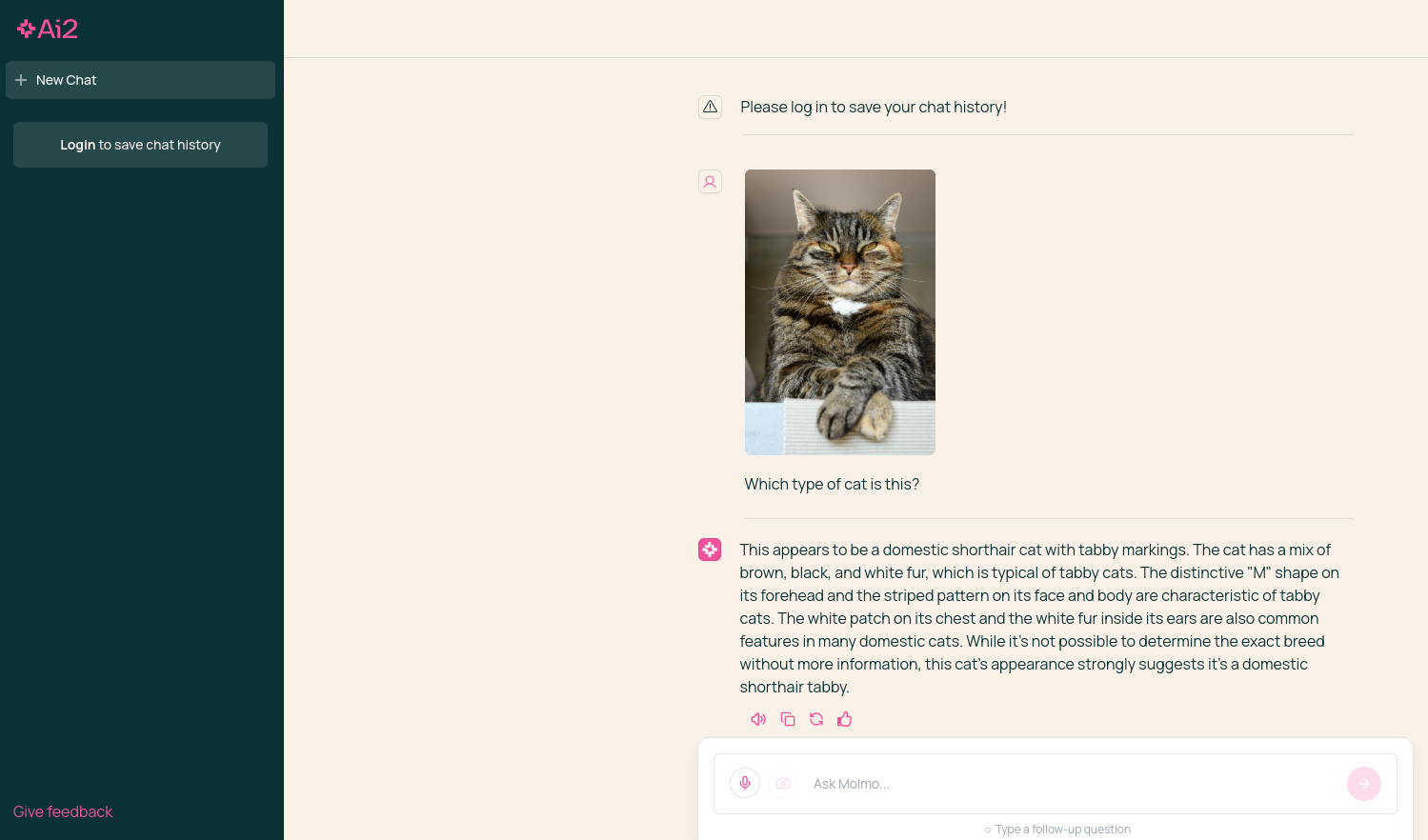

To see how it works, I tested out the demo Ai2 has provided. It was running the Molmo-7B-D model, which was only available for vision-related tasks, and required me to upload an image before it would accept a prompt.

First, I asked it to identify what kind of cat was in the photo I uploaded. It correctly pointed out that it was a domestic shorthair cat with tabby markings. It seemed to pull in information from Wikipedia and some other sources.

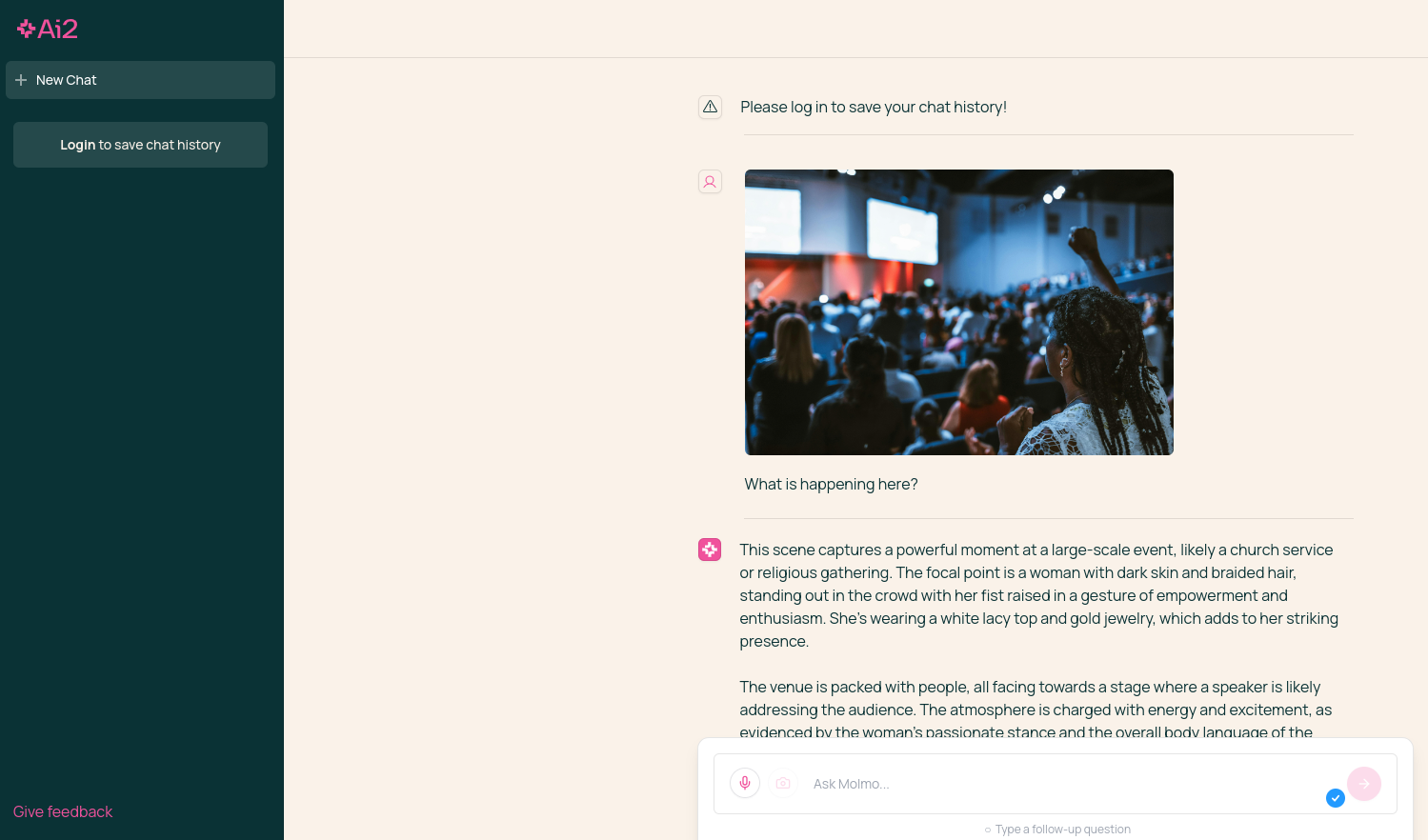

Then, I asked Molmo what was happening in a photo filled with people. It gave me a very relevant answer: a large-scale event (unsure about the church service part) with a stage and many people gathered. It even described what the woman in focus in the photo was wearing and what she was doing.

These were only basic questions, though. You can ask Molmo for more advanced things, like explaining a graphic or fact-checking a photo to ascertain whether it is fake.

Want To Try It Out?

You don't need an API key or a subscription to try Molmo. The developers intend to provide all of their model weights, captioning/fine-tuning data, and source code in the near future for everyone to use.

For now, you can check out the demo for Molmo (vision-related tasks only). There is also a Hugging Face page for those who want to get started with any of these models on their own hardware.

For more details on Molmo, you can go through the official blog and paper to get an overall view of things.

💬 In these AI-crazed times, which open-source AI model has caught your attention?
