AI-powered image generation is useful for creating visual content from text prompts. It typically combines computer vision and natural language processing to produce an image from a user's description.

Even though image generation takes center stage when we talk about AI-generated content, another area has been growing steadily alongside it: AI-powered image editing.

Many popular image editors have already integrated such functionality, and others are considering it. Now, a new open source AI model called OmniGen has been released that focuses on prompt-based image editing (among other things).

Let's take a peek. 😃

OmniGen: Quick AI-Powered Image Edits

Developed by a group of nine researchers at the Beijing Academy of Artificial Intelligence, OmniGen is an MIT-licensed, unified image generation model that creates images from multi-modal prompts, which can combine text and images.

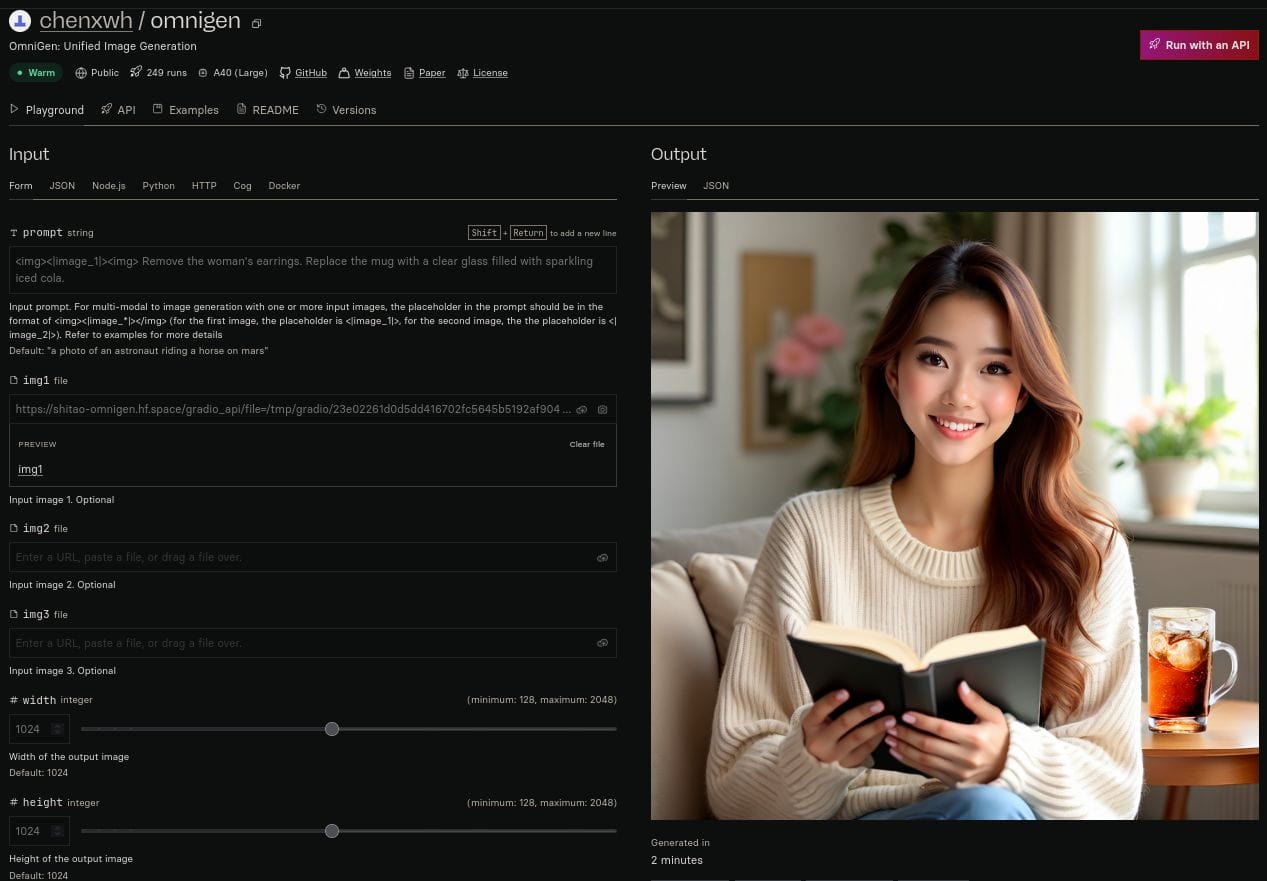

I gave it a quick try, and here's how it went:

OmniGen took an existing image and comfortably followed the prompt's instructions to remove the woman's earrings and replace the mug next to her with a clear glass of sparkling iced cola.

Beyond editing, it can also handle conventional text-to-image generation, subject-driven generation, image-conditioned generation, identity-preserving generation, and various other tasks.

The key highlight of this model is that it doesn't require additional modules such as ControlNet, IP-Adapter, or Reference-Net. It also does away with preprocessing steps like face detection, pose estimation, and cropping.

Its creators believe that:

> The future image generation paradigm should be more simple and flexible, that is, generating various images directly through arbitrarily multi-modal instructions without the need for additional plugins and operations, similar to how GPT works in language generation.

What you've seen so far is the first stable version of OmniGen. The developers say they are continually improving it and hope it will inspire other universal image generation models.

Want To Check It Out?

You can dive deeper into OmniGen's technical details in its technical paper, and the source code is hosted on GitHub.

The developers have provided a limited-use live demo on Hugging Face, where you'll also find OmniGen's model card. You can also run it with ComfyUI.

Via: Decrypt
