Not a day goes by without me reading about some new development in the rapidly evolving field of Artificial Intelligence (AI), be it a big multinational like Meta getting called out for being misleading, or an AI startup receiving funding after copying another's work.

So far, this space has been full of surprises and controversies, with such happenings showing no signs of stopping anytime soon. Now, we have two rather interesting pieces of news to report from the house of IBM.

Let's see what they have been up to.

Upgraded Red Hat Enterprise Linux AI (RHEL AI)

We kick things off in this two-part article with the RHEL AI 1.2 release, which has arrived just over a month after the initial release with loads of new improvements that focus on making things easier for AI developers and researchers.

With this release, there is now support for AMD's Instinct Accelerators, including full integration with the ROCm software stack, and newly added availability on Azure and GCP.

Similarly, the developers have added support for Lenovo ThinkSystem SR675 V3 servers, automatic detection of hardware accelerators, and PyTorch FSDP (Fully Sharded Data Parallel) for distributing training workloads.

There's also a new training checkpoint and resume feature that lets users save the progress of long training runs and pick them up later from the last saved point, saving time and resources.
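To make the idea concrete, here is a minimal sketch of how checkpoint and resume works in general. It is not RHEL AI's implementation; the file format, the function names (`save_checkpoint`, `load_checkpoint`, `train`), and the toy "weight" update are all assumptions made up for illustration.

```python
import json
import os
import tempfile


def save_checkpoint(path, state):
    """Write the state to a temp file first, then swap it into place."""
    tmp_path = path + ".tmp"
    with open(tmp_path, "w") as f:
        json.dump(state, f)
    os.replace(tmp_path, path)  # atomic rename, so a crash cannot leave a half-written file


def load_checkpoint(path):
    """Return the saved state, or a fresh one if no checkpoint exists yet."""
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"step": 0, "weight": 0.0}


def train(path, total_steps, stop_after=None, every=10):
    """Toy training loop that resumes from the last checkpoint.

    `stop_after` simulates an interruption after that many steps.
    Returns the in-memory state and the number of steps run in this call.
    """
    state = load_checkpoint(path)
    steps_run = 0
    while state["step"] < total_steps:
        if stop_after is not None and steps_run >= stop_after:
            break  # simulated interruption; unsaved steps are lost
        state["weight"] += 0.5  # stand-in for a real optimizer update
        state["step"] += 1
        steps_run += 1
        if state["step"] % every == 0:
            save_checkpoint(path, state)
    return state, steps_run


if __name__ == "__main__":
    ckpt = os.path.join(tempfile.mkdtemp(), "ckpt.json")
    train(ckpt, total_steps=100, stop_after=25)  # interrupted run
    state, ran = train(ckpt, total_steps=100)    # resumed run
    print(state["step"], ran)
```

The second call does not start from zero: it reloads the last saved step and only repeats the few steps that ran after that save, which is where the time and resource savings come from.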

Get RHEL AI 1.2

You can grab the latest RHEL AI release from the official website, and the announcement blog has all the relevant information on it.

Another thing to note is that support for RHEL AI 1.1 will be deprecated by the second week of November, and users are advised to upgrade to the 1.2 release to continue receiving support.

Introduction Of Granite 3.0

Next up on the agenda is IBM's newly released family of open source LLMs, Granite 3.0.

As the third iteration of the popular AI model family, Granite 3.0 is touted by IBM to match or beat similarly sized LLMs from other organizations on both academic and industry benchmarks.

Released under the Apache 2.0 license, there are a total of ten models under the Granite 3.0 family, which cover many use cases.

For general purpose or language tasks, there are models like the Granite 3.0 8B Instruct, Granite 3.0 2B Instruct, Granite 3.0 8B Base, and Granite 3.0 2B Base.

For running models on CPU-based systems or for low latency applications, there are Mixture Of Experts (MoE) models such as the Granite 3.0 3B-A800M Instruct, Granite 3.0 1B-A400M Instruct, Granite 3.0 3B-A800M Base, and Granite 3.0 1B-A400M Base.

And, finally, there are two guardrail/safety-focused models, the Granite Guardian 3.0 8B and Granite Guardian 3.0 2B. These are used to detect and prevent harmful content/inputs, and can be deployed alongside any open or proprietary AI models.
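The "deployed alongside" pattern can be sketched roughly like this: a guard check runs on the prompt before it reaches the main model, and again on the response before it reaches the user. Everything below is a hypothetical stand-in. `keyword_guard` is a crude keyword filter, not Granite Guardian, and `echo_model` is a placeholder for whichever LLM you are wrapping.

```python
from typing import Callable

REFUSAL = "Sorry, I can't help with that."


def guarded_generate(
    prompt: str,
    generate: Callable[[str], str],
    is_harmful: Callable[[str], bool],
) -> str:
    """Screen the prompt, call the model, then screen the response."""
    if is_harmful(prompt):
        return REFUSAL  # blocked before the main model is ever called
    response = generate(prompt)
    if is_harmful(response):
        return REFUSAL  # blocked before the user sees it
    return response


def keyword_guard(text: str) -> bool:
    """Hypothetical guard; a real setup would query a guardrail model here."""
    blocked_terms = ("steal a password",)
    return any(term in text.lower() for term in blocked_terms)


def echo_model(prompt: str) -> str:
    """Hypothetical main model that simply echoes the prompt back."""
    return f"You asked: {prompt}"


if __name__ == "__main__":
    print(guarded_generate("What is RHEL AI?", echo_model, keyword_guard))
    print(guarded_generate("How do I steal a password?", echo_model, keyword_guard))
```

Because the guard is just a function passed in, the wrapped model can be swapped freely, which is the point of pairing a guardrail model with any open or proprietary LLM.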

All of these join an ever-expanding list of open source LLMs that can be used freely for both research and commercial use cases.

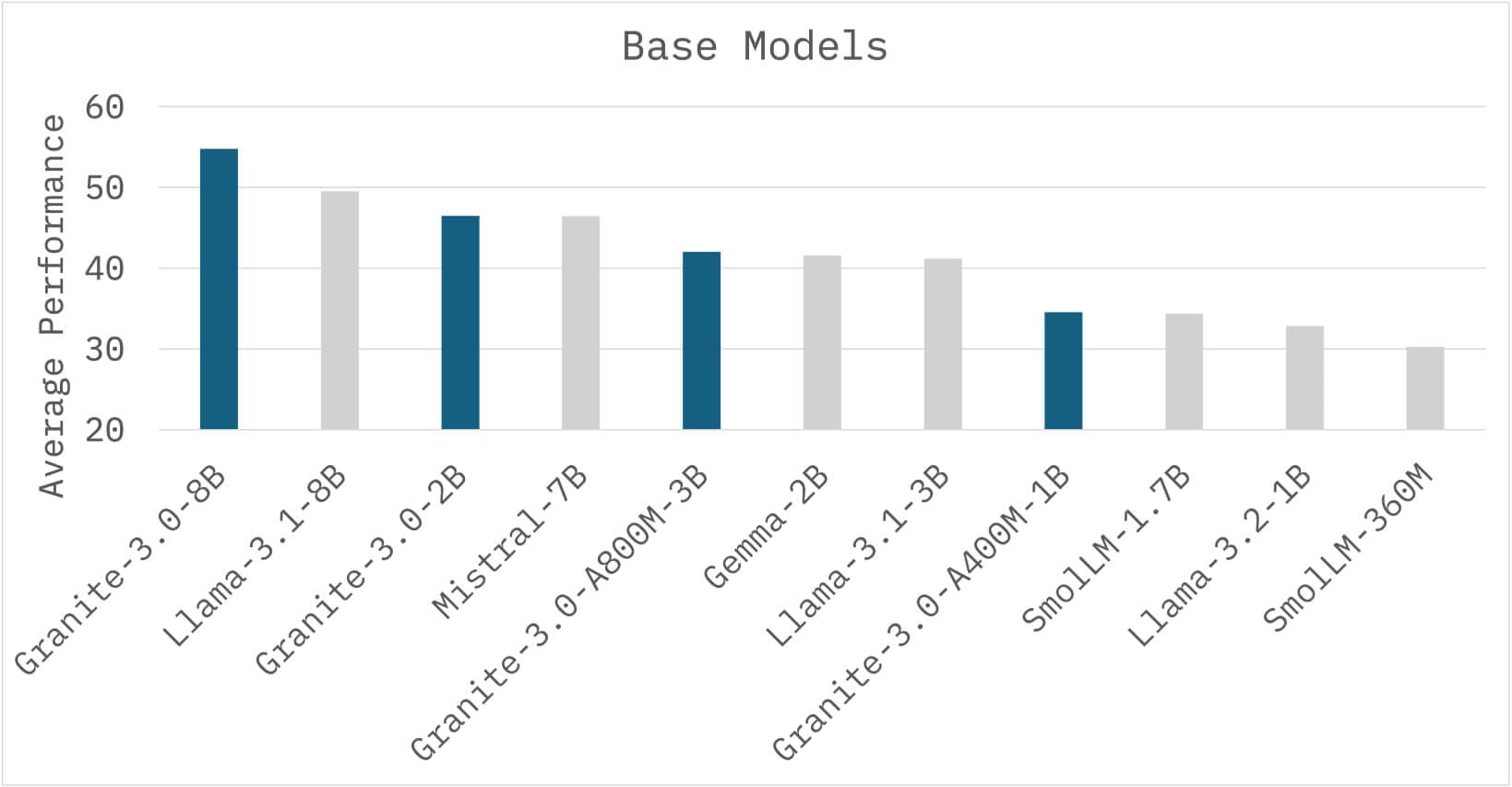

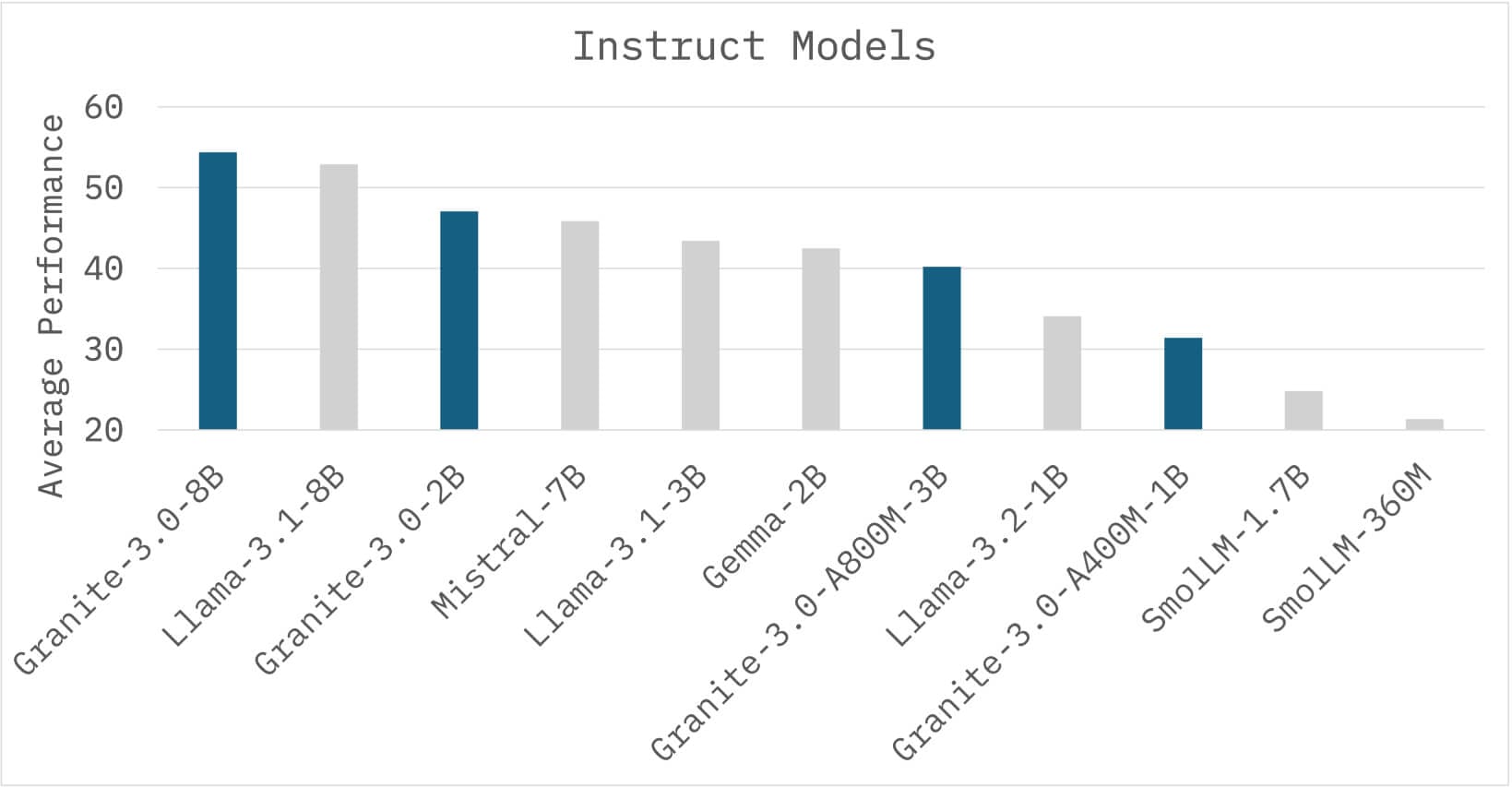

If you are searching for the numbers behind IBM's claims, here are some benchmarks for the Base and Instruct models shared by them. 👇

Source: IBM

According to IBM, the data used to train these models has been gathered from various curated sources that include things like unstructured natural language text, code data, a collection of synthetic datasets, and many publicly available datasets with permissive licenses.

Get Granite 3.0

If you are interested in deploying any one of the Granite 3.0 models, then you can get started by visiting the Hugging Face collections page for this family of models.

Alternatively, you can source the models from the project's GitHub repo. If you just want a quick hands-on, then IBM has also provided a live demo of the Granite 3.0 8B Instruct model on the Granite playground.

If you are keen on learning more about the Granite 3.0 family of models, then you can go through the extensive technical paper and announcement blog.

💬 Looking forward to these new releases? Let me know your thoughts in the comments below!
