AI has been growing at an astonishing pace; some might revere it, some might not. However, one thing is now clear: There is a need to keep AI in check.

That is why we need tools and services built for the job, particularly open-source ones, since openness helps promote transparency.

In recent times, many governments around the world have been warming up to the concept of open-sourcing important tools and services to benefit the general population.

One such move from the UK Government has caught our attention. With a recent press release, they have open-sourced an AI safety tool, “Inspect”, for the global community to adopt and use.

Inspect: What to Expect?

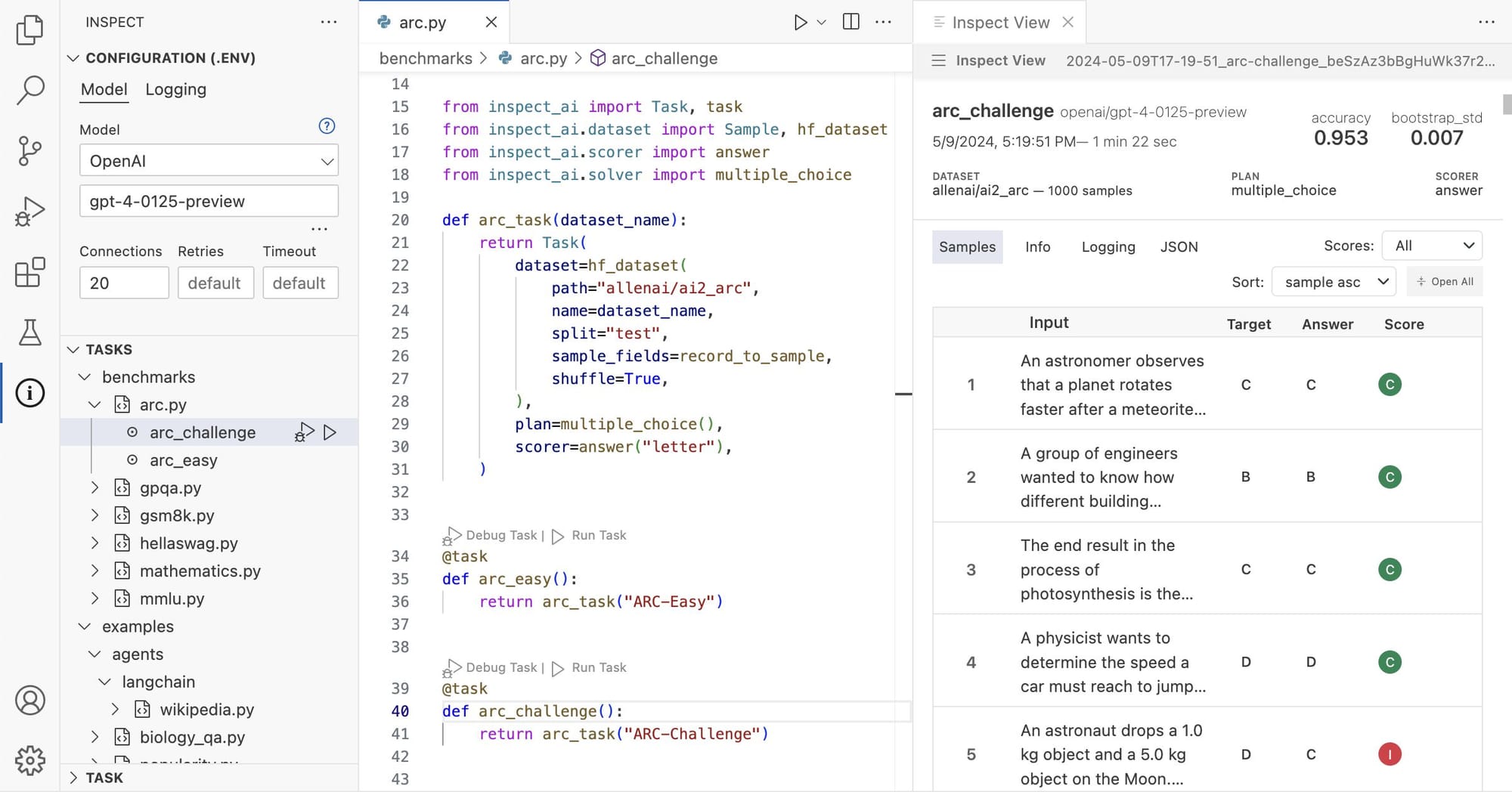

Spearheaded by the AI Safety Institute, Inspect is a safety evaluation tool that has been open-sourced under the MIT License, allowing anyone to copy, modify, merge, publish, distribute, sublicense, and/or sell copies of it.

This tool allows anyone to test specific elements of AI models, such as their ability to reason, their autonomous capabilities, and their core knowledge, and to generate a score for the model in question based on the results.

If you were wondering how Inspect facilitates that, its evaluations have three key components. First come the Datasets, which consist of a set of standardized samples for evaluation.

Then, there are the Solvers, which carry out the actual evaluation, and finally, the Scorers, which take the output from the Solvers and give out the final result.
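To make the three parts a bit more concrete, here is a rough conceptual sketch in plain Python. It does not use Inspect's actual API; the names `Sample`, `toy_model`, `solver`, `scorer`, and `evaluate` are hypothetical and exist only to illustrate how a dataset, a solver, and a scorer could fit together.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Sample:
    """One standardized evaluation item: a prompt and its expected answer."""
    prompt: str
    target: str


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for an AI model; a real run would query an LLM."""
    answers = {
        "What is 2 + 2?": "4",
        "What is the capital of France?": "Paris",
    }
    return answers.get(prompt, "I don't know")


def solver(sample: Sample, model: Callable[[str], str]) -> str:
    """Solver: runs the model on the sample's prompt and returns its output."""
    return model(sample.prompt)


def scorer(output: str, sample: Sample) -> bool:
    """Scorer: compares the output with the target, ignoring case and spacing."""
    return output.strip().lower() == sample.target.strip().lower()


def evaluate(dataset: List[Sample], model: Callable[[str], str]) -> float:
    """Run every sample through the solver and scorer; return the accuracy."""
    if not dataset:
        return 0.0
    results = [scorer(solver(sample, model), sample) for sample in dataset]
    return sum(results) / len(results)


# Dataset: a small set of standardized samples (made up for this sketch).
dataset = [
    Sample("What is 2 + 2?", "4"),
    Sample("What is the capital of France?", "Paris"),
    Sample("Who wrote Hamlet?", "Shakespeare"),
]

accuracy = evaluate(dataset, toy_model)
print(f"accuracy: {accuracy:.2f}")
```

In this toy setup, the score is simply the share of samples the model answered correctly. Inspect's own datasets, solvers, and scorers are more capable than this, so refer to its official documentation for the real interfaces.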

Moments after the announcement, Clément Delangue, CEO of Hugging Face, chimed in on a Twitter thread by Ian Hogarth, Chair of the AI Safety Institute, saying:

This is very cool, thanks for sharing openly! Wonder if there’s a way to integrate with https://t.co/XZn1kgwGFM to evaluate the million models there or to create a public leaderboard with results of the evals (ex: https://t.co/ZkSmieEPbs) cc @IreneSolaiman @clefourrier

— clem 🤗 (@ClementDelangue) May 10, 2024

An idea to create a public leaderboard of AI safety evaluation results? That would be pretty useful!

If this were to become a reality, people around the globe could easily look into how different AI models perform in terms of safety evaluations on one of the most popular open AI communities out there.

As things stand now, Inspect also has the opportunity to be an influential tool in how governments handle AI.

Want to check it out?

You can do that by visiting the official website, where you will find instructions on how you can configure Inspect on the platform of your choice.

If you are interested in the source code, head to its GitHub repo.

💬 Would this encourage other countries and organizations to come up with safety evaluation systems for AI models, and probably introduce some kind of certifications for it? Let me know your thoughts on this.
