We are no strangers to Big Tech platforms occasionally reprimanding us for posting Linux and homelab content. YouTube and Facebook have done it. The pattern is familiar. Content gets flagged or removed. Platforms offer little explanation.

And when that happens, there is rarely any recourse for creators.

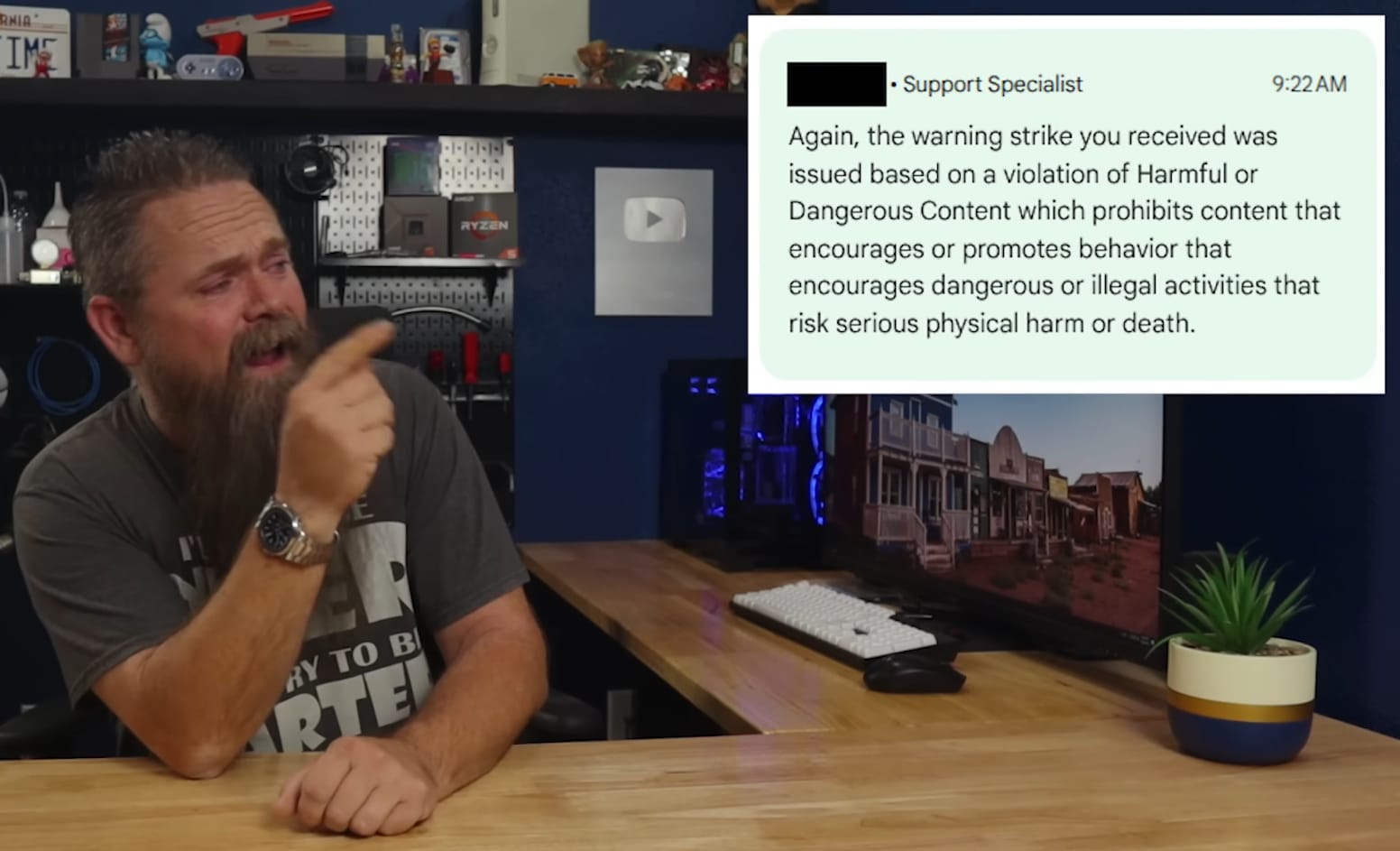

Now, a popular tech YouTuber, CyberCPU Tech, has faced the same treatment. This time, their entire channel was at risk.

YouTube's High-Handedness on Display

Two weeks ago, Rich, who runs the channel, posted a video on installing Windows 11 25H2 with a local account. YouTube removed it, saying that it was "encouraging dangerous or illegal activities that risk serious physical harm or death."

Days later, Rich posted another video showing how to bypass Windows 11's hardware requirements to install the OS on unsupported systems. YouTube took that down too.

Both videos received community guidelines strikes. Rich appealed both immediately. The first appeal was denied in 45 minutes, the second in just five.

Rich initially suspected overzealous AI moderation was behind the takedowns. Later, he wondered if Microsoft was somehow involved. Without clear answers from YouTube, it was all guesswork.

Then came the twist. YouTube eventually restored both videos. The platform claimed that its "initial actions" (which could mean the takedowns, the appeal denials, or both) were not the result of automation.

Now, if you have an all-organic, nature-given brain inside your head (yes, I am not counting the cyberware-equipped peeps in the house), you can easily see the problem.

If humans reviewed these videos, how did YouTube conclude that these Windows tutorials posed "risk of death"?

This incident highlights how automated moderation systems struggle to distinguish legitimate content from harmful material. These systems lack context. Big Tech companies pour billions into AI. Yet their moderation tools flag harmless tutorials as life-threatening content. Another recent instance is the removal of Enderman's personal channel.

Meanwhile, actual spam slips through unnoticed. What these platforms need is human oversight. Automation can assist but cannot replace human judgment in complex cases.
