Brave is one of the best free and open-source web browsers based on Chromium.

Sure, it has its quirks, and a somewhat controversial history of integrating crypto tech. But it is still arguably one of the fastest and most privacy-focused browsers out there.

When it comes to AI, Brave has taken an interesting approach to integrating it into the browser.

Earlier this year, Brave started experimenting with a "Bring Your Own Model" (BYOM) feature for Brave Leo, its AI assistant, in its nightly (development) releases. The idea was to let users plug custom or local AI models into Brave Leo and use it more safely.

With this feature, you do not have to trust Brave's integrated AI model or any remote connections. You can simply plug in your favorite local model using Ollama and keep your conversations on your device 🔒

And now, this functionality is finally available in Brave 1.69 and later. My colleague spotted it on 1.70, and I think it is worth pointing out 🤯

Use Your Custom Local or Remote AI Models

I tested Brave's BYOM feature with a local AI model, llama3. You can use Ollama to download the model on Linux and other platforms.

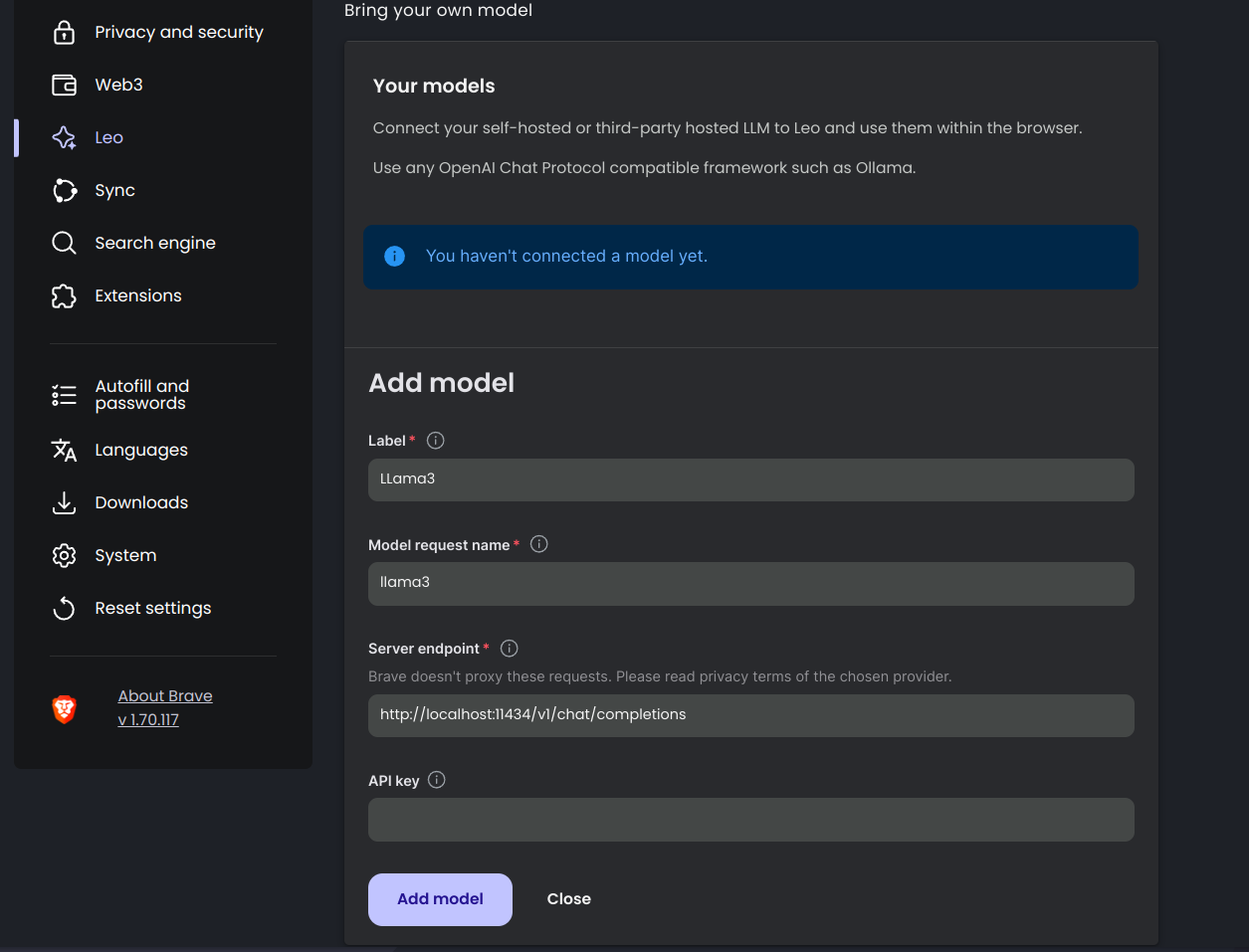
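If you want to confirm that the model is downloaded, and see the exact name Ollama uses for it (which is what Brave asks for as the model request name), you can query Ollama's local API. Here is a minimal Python sketch using only the standard library. It assumes Ollama is running on its default port (11434) and exposes its /api/tags endpoint, which lists locally available models; the helper names model_names and has_model are hypothetical, just for illustration.

```python
import json
import urllib.error
import urllib.request

# Assumption: Ollama is running locally on its default port.
TAGS_URL = "http://localhost:11434/api/tags"


def model_names(raw: bytes) -> list:
    """Return the model names from an /api/tags JSON response body (hypothetical helper)."""
    data = json.loads(raw)
    return [m["name"] for m in data.get("models", [])]


def has_model(names: list, wanted: str) -> bool:
    """True if `wanted` is listed, with or without a tag suffix such as ':latest'."""
    return any(n == wanted or n.startswith(wanted + ":") for n in names)


if __name__ == "__main__":
    try:
        with urllib.request.urlopen(TAGS_URL, timeout=10) as resp:
            names = model_names(resp.read())
        print("Local models:", names)
        print("llama3 available:", has_model(names, "llama3"))
    except (urllib.error.URLError, TimeoutError) as err:
        # Usually means Ollama is not running or listens on another port.
        print(f"Could not reach {TAGS_URL}: {err}")
```

If the list shows something like llama3:latest, the base name llama3 is typically what you would enter in Brave, since Ollama treats a name without a tag as the :latest tag.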

Once that's done, head to Brave Leo's settings and add the model using the following information:

- Label: Any display name you like.

- Model request name: The model's name as the server expects it (for example, llama3 for Ollama).

- Server endpoint: The endpoint URL for the AI model. For local models served by Ollama, this is http://localhost:11434/v1/chat/completions.

- API key: Required only if you are using a remote AI model, such as OpenAI's ChatGPT. Local AI models do not need one.
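Before adding the endpoint to Brave, it can help to check that it actually answers chat requests. Below is a minimal Python sketch, standard library only, that sends one OpenAI-style chat completion request to the Ollama endpoint listed above. It assumes Ollama is running locally and llama3 has been pulled. The helper names build_request and extract_reply are hypothetical, and the optional Bearer-token header reflects the usual scheme for OpenAI-compatible remote APIs; a local Ollama server does not need a key.

```python
import json
import urllib.error
import urllib.request
from typing import Optional

# Assumption: the local Ollama endpoint from the settings above, with llama3 pulled.
ENDPOINT = "http://localhost:11434/v1/chat/completions"
MODEL = "llama3"


def build_request(prompt: str, model: str = MODEL,
                  api_key: Optional[str] = None) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request (hypothetical helper)."""
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Remote OpenAI-compatible APIs usually expect a Bearer token;
        # a local Ollama server does not need one.
        headers["Authorization"] = f"Bearer {api_key}"
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one complete JSON response
    }).encode("utf-8")
    return urllib.request.Request(ENDPOINT, data=body, headers=headers, method="POST")


def extract_reply(raw: bytes) -> str:
    """Return the assistant's text from an OpenAI-style response body."""
    data = json.loads(raw)
    return data["choices"][0]["message"]["content"]


if __name__ == "__main__":
    try:
        req = build_request("Say hello in one sentence.")
        with urllib.request.urlopen(req, timeout=120) as resp:
            print(extract_reply(resp.read()))
    except (urllib.error.URLError, TimeoutError) as err:
        # E.g. Ollama is not running, or the model name does not match a pulled model.
        print(f"Request to {ENDPOINT} failed: {err}")
```

If this prints a reply, the same endpoint URL and model name should work in Brave's settings.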

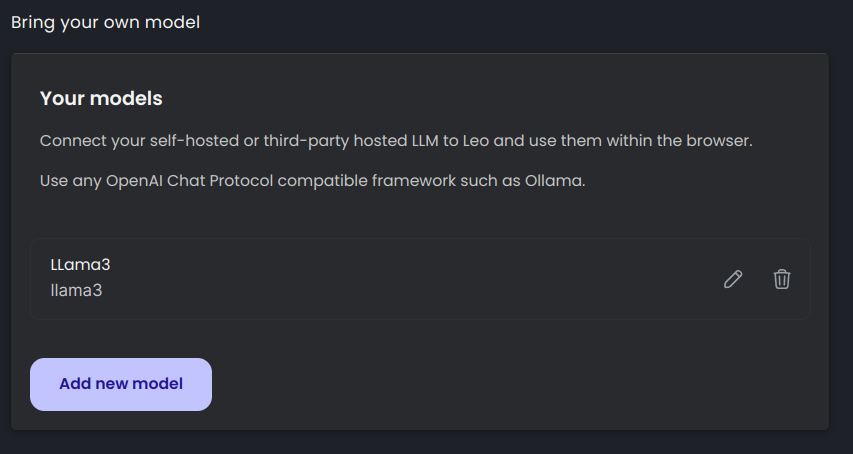

Once you add it, you should see it listed like this:

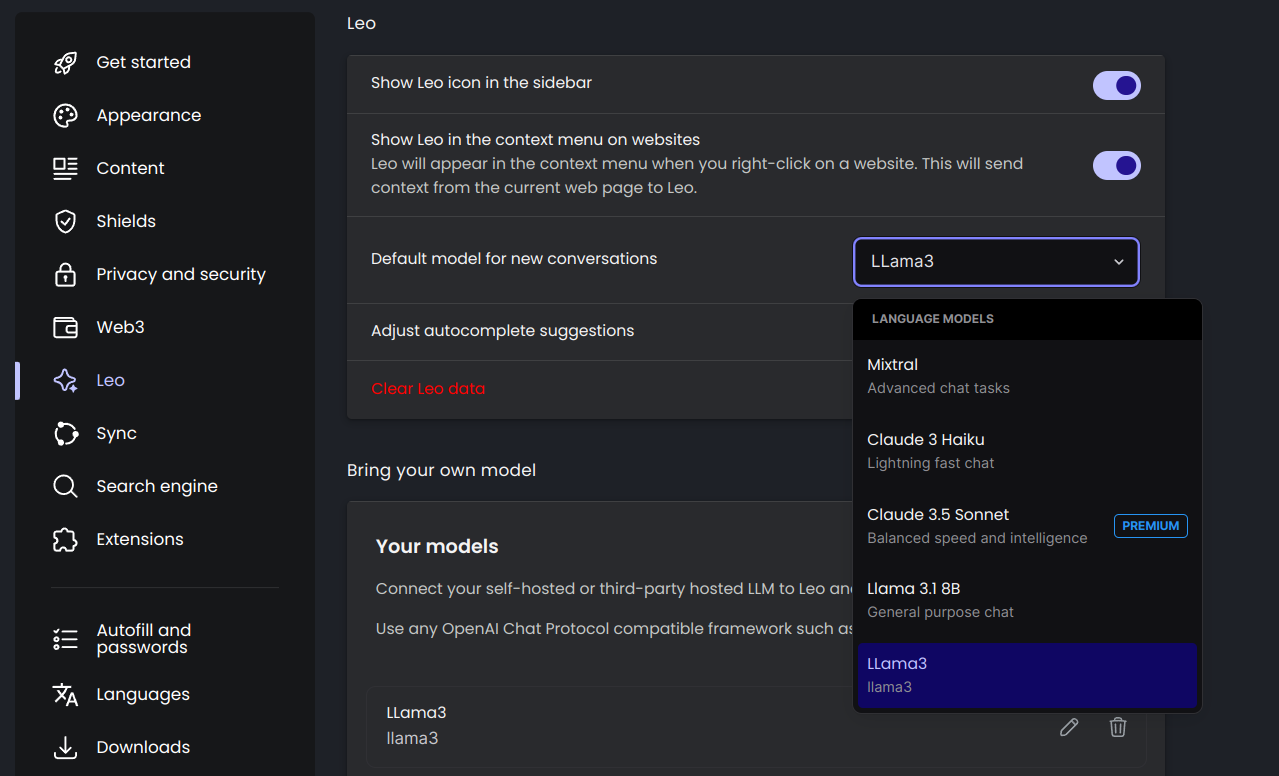

Next, set it as the "Default model for new conversations" in the same settings, and restart the browser so that Brave Leo uses it. The screenshot below shows how to do that:

Alternatively, you can simply select the custom AI model from within Brave Leo, as shown below:

Wrapping Up

The most exciting thing about Brave's BYOM feature is that you no longer need a separate web UI to chat with local LLMs. Page Assist remains one of my favorites for using local AI models in other browsers, but if you use Brave, you no longer need it.

With this functionality, I think Brave gives you good control over which AI model you use, how you use it, and when you do not use AI at all. That is better than a web browser forcing AI assistance on you.

💬 What do you think about Brave's new approach to AI integration? Share your thoughts in the comments below!
