Warp is a Rust-based, cross-platform terminal app that bills itself as a terminal reimagined “with AI and collaborative tools for better productivity”. Its adoption has been growing steadily, with many users finding the AI-powered features under the Warp AI umbrella useful.

Coming off the introduction of Warp for ARM, the developers have added a new feature to Warp AI called “Agent Mode”, which, they claim, embeds an LLM directly into the terminal for “multi-step workflows”.

Let's check it out.

Agent Mode: What's The Buzz About?

Agent Mode is a new way to interact with AI in Warp. It understands plain English (including commands) and can suggest code, guide you with outputs, correct itself, learn to work with any service that hosts public documentation or a help page, and more.

Thanks to its natural language prowess, users can simply ask it to do things, similar to having a conversation with a colleague or a subject-matter expert. It has tight integration with the CLI, enabling it to interface with any service without any extra configuration overhead.

The developers add that:

If the service has a CLI, an API, or publicly available docs, you can use Agent Mode for the task. Agent Mode has inherent knowledge of most public CLIs, and you can easily teach it how to use internal CLIs by asking it to read their help content.

They also stress that auto-detection of natural language happens locally. Data is only sent to their servers when you choose to proceed and have Warp AI process what you entered. Auto-detection is enabled by default, but you can disable it if you'd like.

They clarified that part with an example:

If Warp AI needs the name of your git branch, it will ask to run git branch and read the output. You will be able to approve or reject the command. We’ve designed Agent Mode so that you know exactly what information is leaving your machine.
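To make the approve-or-reject idea concrete, here is a minimal Python sketch of that kind of gate. It is not Warp's implementation; the function names `ask_on_terminal` and `run_with_approval` are made up for illustration, and the only assumption is that a command's output is read solely after the user says yes.

```python
import subprocess
from typing import Callable, Optional, Sequence


def ask_on_terminal(command: Sequence[str]) -> bool:
    """Show the exact command and ask the user whether it may run."""
    answer = input(f"Run `{' '.join(command)}`? [y/N] ")
    return answer.strip().lower() == "y"


def run_with_approval(
    command: Sequence[str],
    approve: Callable[[Sequence[str]], bool] = ask_on_terminal,
) -> Optional[str]:
    """Run `command` only if `approve` returns True.

    Returns the command's stdout when approved, or None when rejected.
    """
    if not approve(command):
        # Rejected: the command never runs, so no output exists to share.
        return None
    result = subprocess.run(command, capture_output=True, text=True, check=True)
    return result.stdout
```

Calling `run_with_approval(["git", "branch"])` would prompt on the terminal first, and the branch list would only be captured after a `y`.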

Of course, it's no secret that Warp AI is powered by OpenAI, so naturally all the input is sent to them, with Warp assuring that OpenAI doesn't train its models on such data. Users who aren't comfortable with that can switch their LLM provider by opting for Warp's enterprise plan.

If you were wondering how Agent Mode could help, here's an example given by the developers.

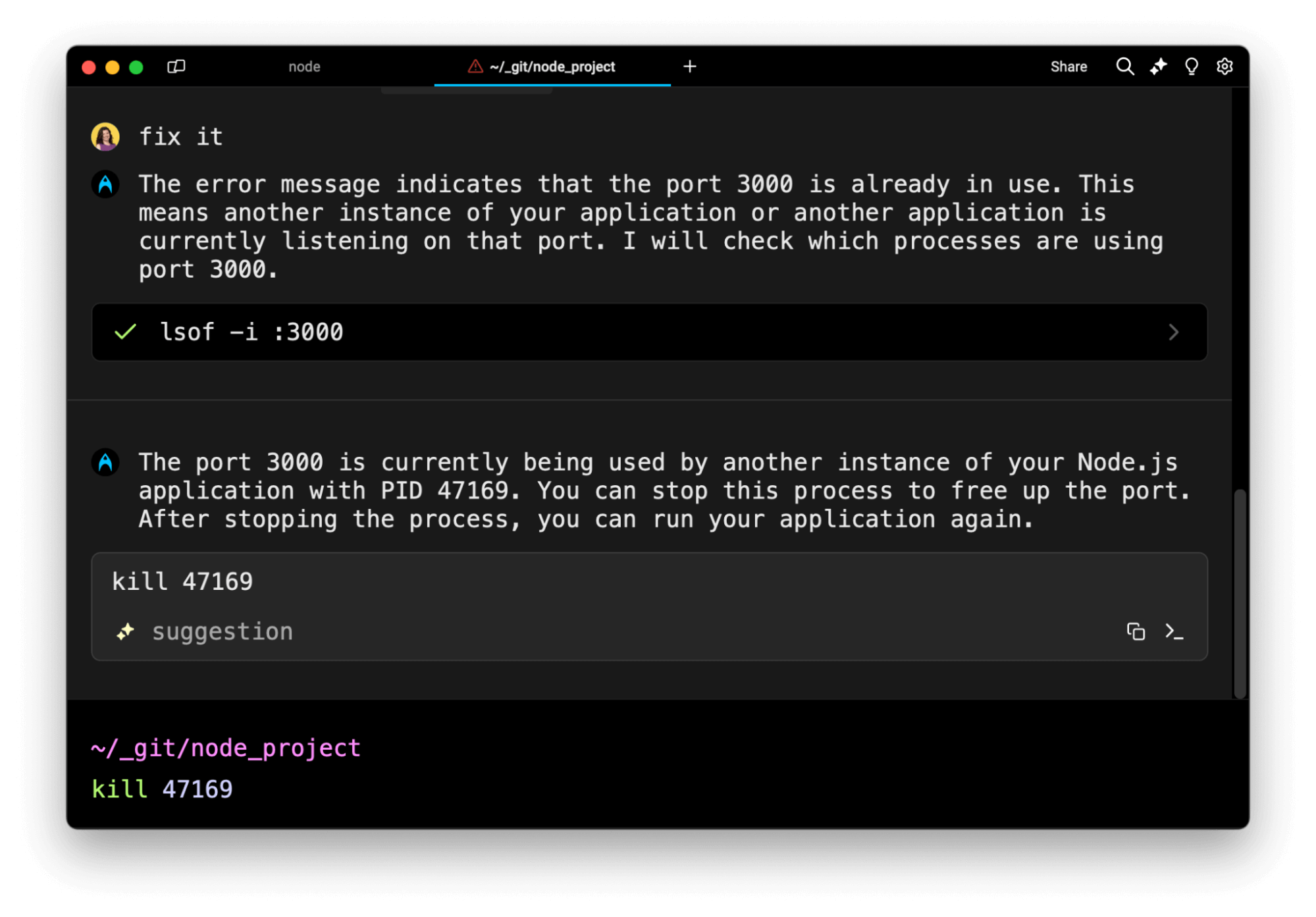

They illustrate how you can deal with the “port 3000 already taken” error.

After attaching context to a query, users can type something like “fix it”, and Agent Mode will start working on providing solutions 🤯
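For readers who haven't run into it, the “port already taken” error is simply the operating system refusing a second bind on the same address. The Python sketch below shows one way to detect that condition and pick another port. It is an illustration only, not what Agent Mode runs; `is_port_in_use` and `first_free_port` are hypothetical helper names.

```python
import errno
import socket


def is_port_in_use(port: int, host: str = "127.0.0.1") -> bool:
    """Return True if binding to (host, port) fails with 'address in use'."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as probe:
        try:
            probe.bind((host, port))
        except OSError as exc:
            if exc.errno == errno.EADDRINUSE:
                return True
            raise  # Some other problem (e.g. permissions); don't hide it.
    return False


def first_free_port(start: int, attempts: int = 20, host: str = "127.0.0.1") -> int:
    """Return the first port at or after `start` that can be bound."""
    for port in range(start, min(start + attempts, 65536)):
        if not is_port_in_use(port, host):
            return port
    raise RuntimeError(f"no free port found starting at {start}")


if __name__ == "__main__":
    if is_port_in_use(3000):
        print("Port 3000 is taken; try", first_free_port(3001))
    else:
        print("Port 3000 is free")
```

The other common fix is to find and stop the process that is holding the port, which is the sort of multi-step task an agent can walk you through.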

Here's a brief demo of its AI agent feature that we tried ourselves.

Get Warp

If this new feature of Warp interests you, then you can head to its official website, where you will find packages for Linux and macOS, with the Windows app coming soon.

Users on every plan tier will have access to Agent Mode. The free plan includes up to 40 AI requests per month, and the paid plans include more.

If you are an existing user, you will now see the new Agent Mode in place of the first-gen Warp AI chat panel. The “type # to ask Warp AI” feature is being retained for the short term, but there are plans to retire it as Agent Mode matures.

If you would like to learn more, the official announcement blog and documentation have further information.

💬 I know most of you do not like proprietary apps like this. But, if you give Warp a try, do let me know in the comments below.
